Parse JSON logs with Pipelines

Overview

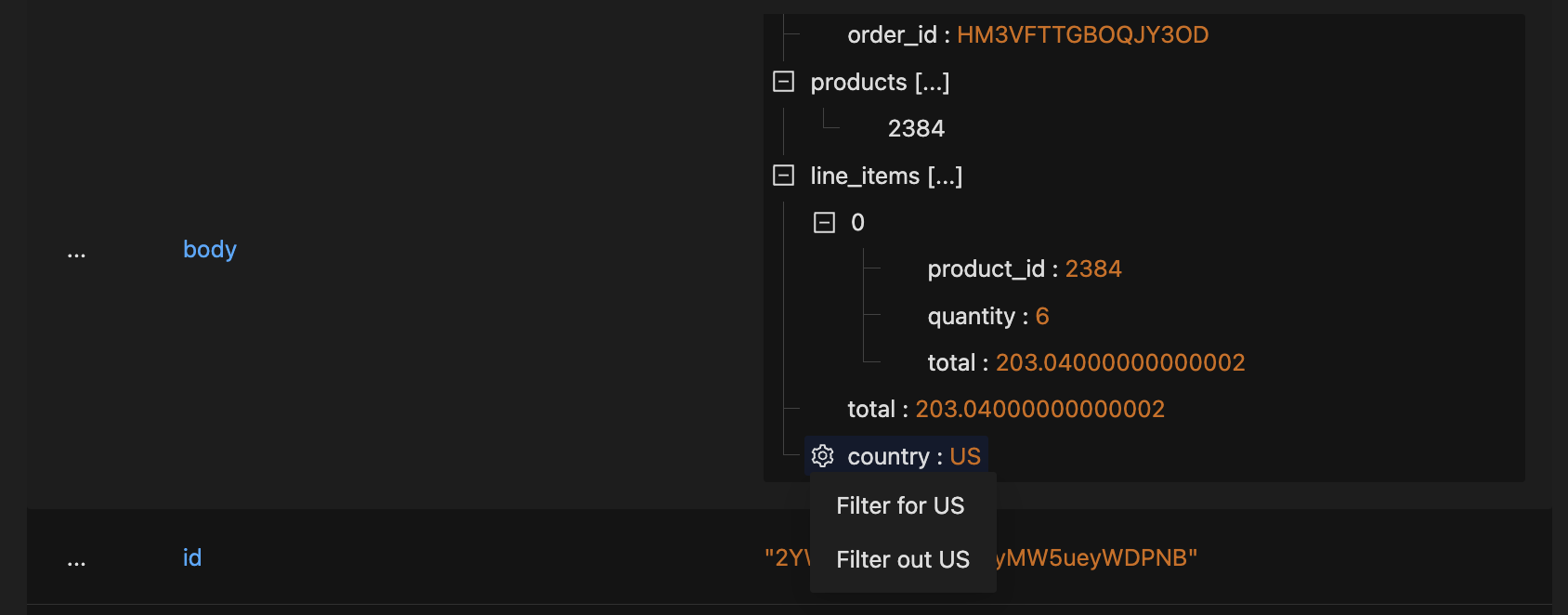

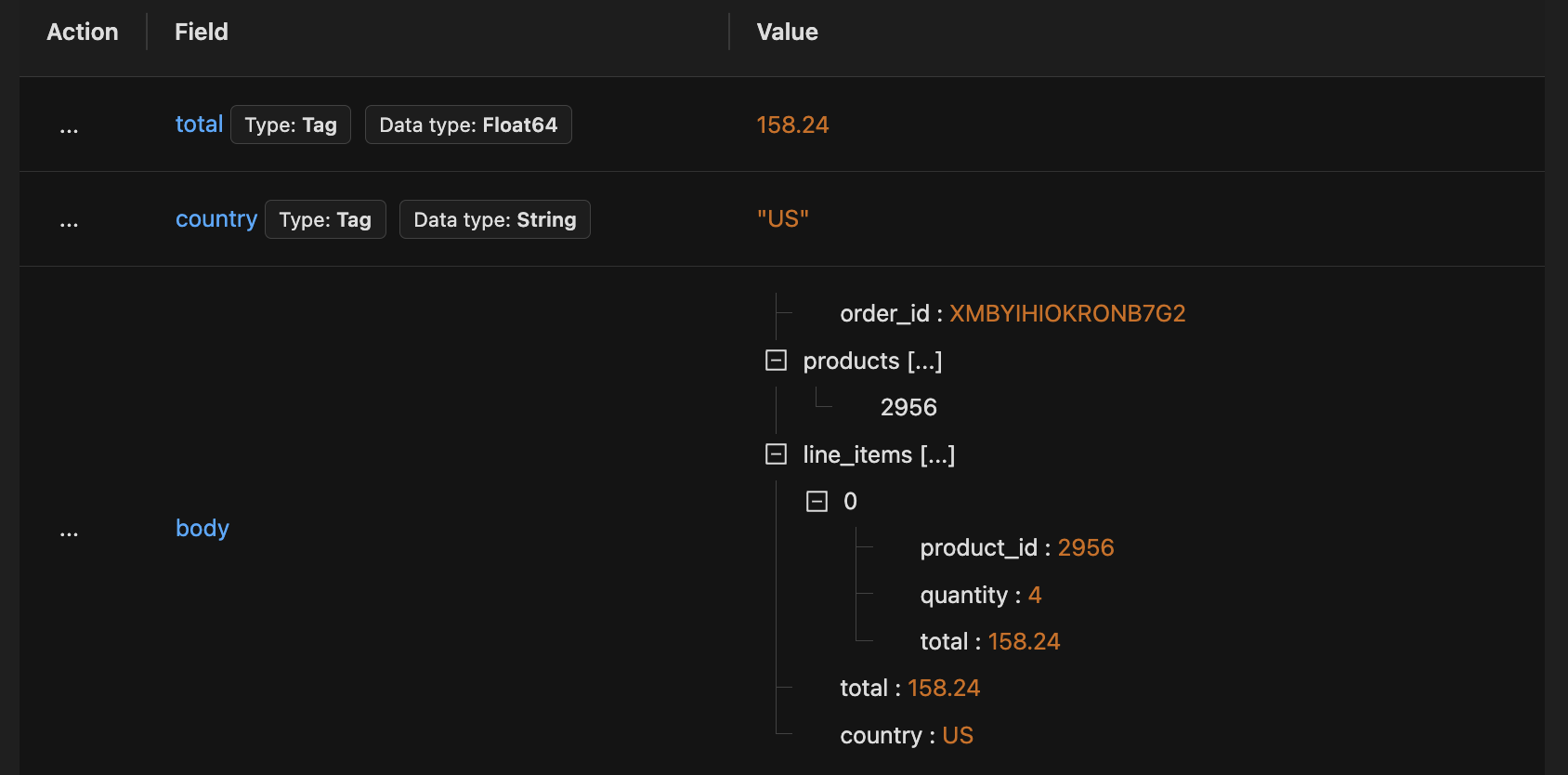

If your logs contain serialized JSON in their bodies, the log detail view in the SigNoz UI will display the body in a parsed, easy-to-use structure. You can also filter your logs based on JSON data in the body.

While these powerful features work out of the box, you can take things up a notch by pre-processing your log records to parse interesting fields out of JSON bodies into their own log attributes.

Filtering by log attributes is more efficient than filtering on JSON data in the body, and extracted attributes also unlock aggregations based on fields from your JSON data.

The parsed attributes can also be used to further enrich your log records. For example, if the serialized JSON contains trace information, you could populate trace details in your log records from the parsed attributes, enabling correlation between your logs and their corresponding traces.

In this guide, you will see how to parse interesting fields out of serialized JSON bodies into their own log attributes.

Prerequisites

- You are sending logs to SigNoz.

- Your logs contain serialized JSON data in the body.

Create a Pipeline to Parse Log Attributes out of JSON Body

After you have started sending logs with serialized JSON bodies to SigNoz, you can follow the steps below to create a pipeline for parsing log attributes out of the JSON data.

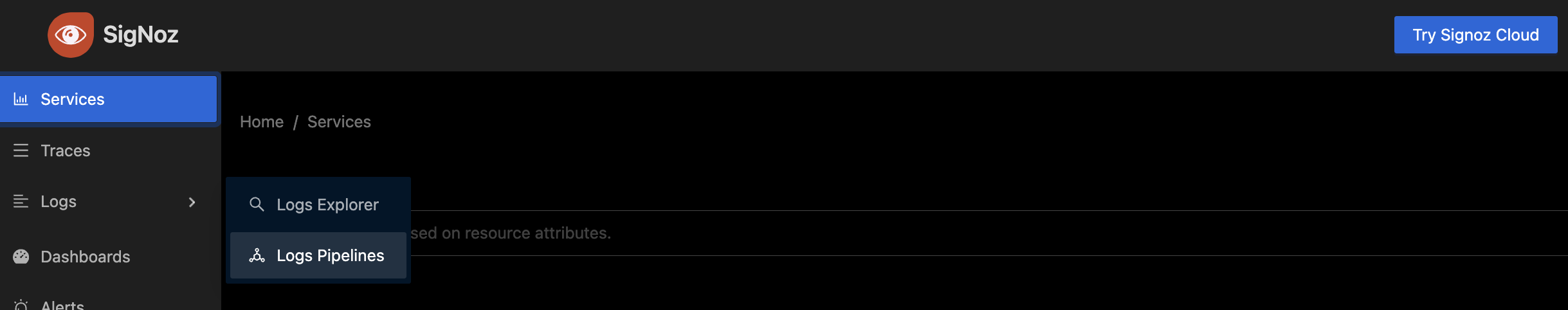

Step 1: Navigate to Logs Pipelines Page

Hover over the Logs menu in the sidebar and click on the Logs Pipeline submenu item.

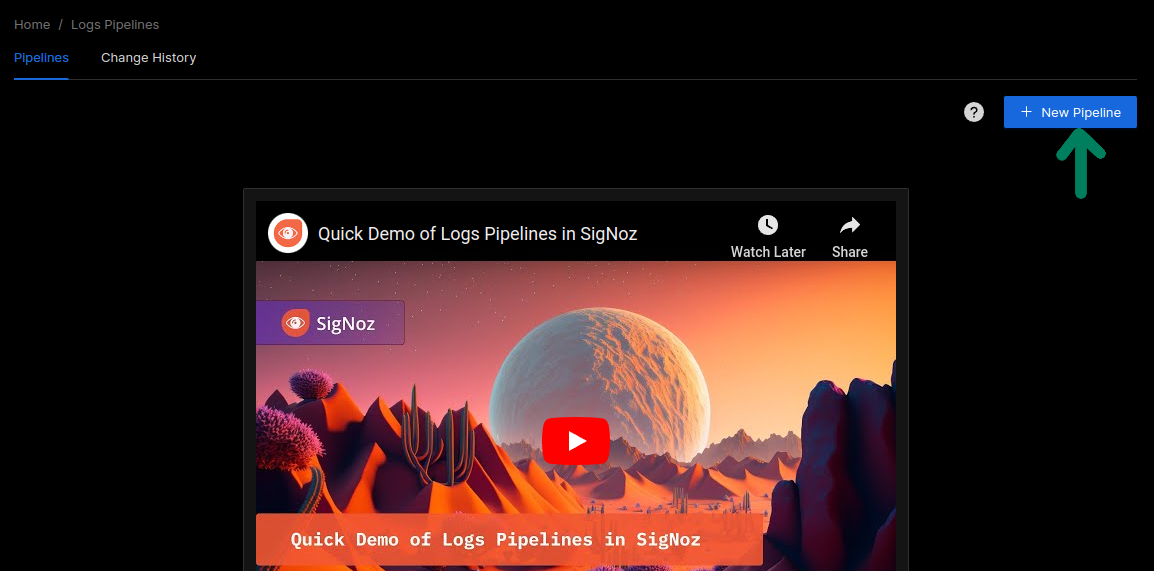

Step 2: Create a New Pipeline

Open the "Create New Pipeline" dialog.

If you do not have existing pipelines, press the "New Pipeline" button.

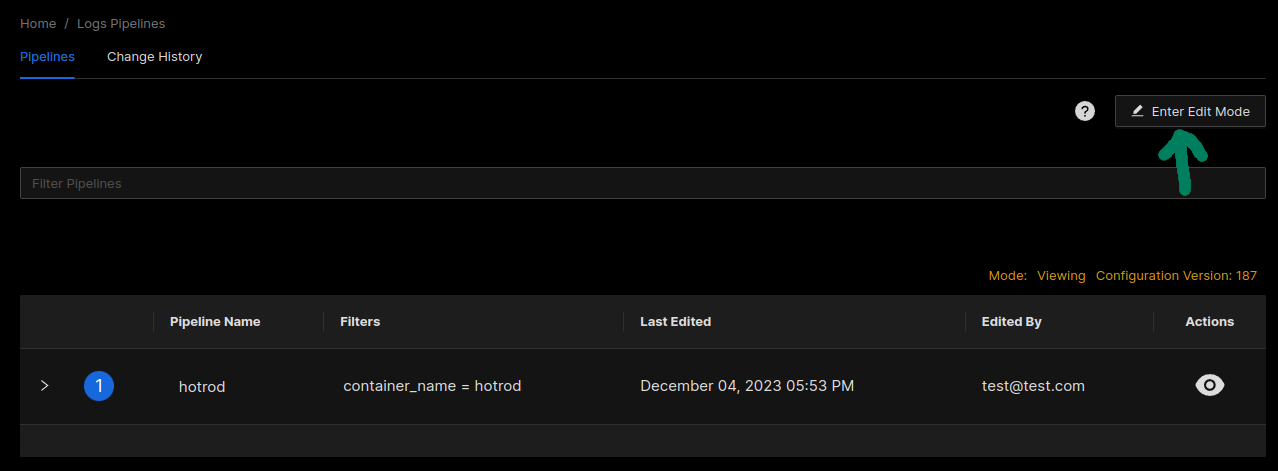

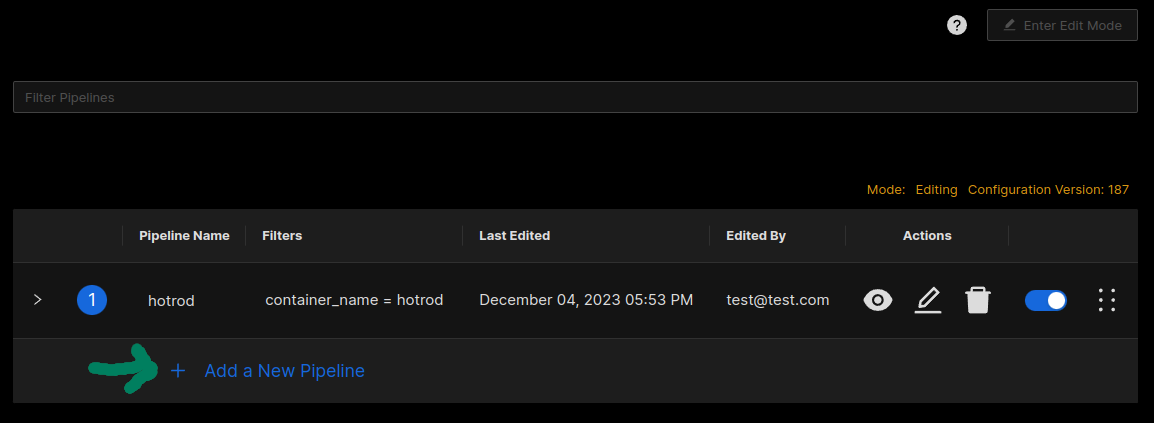

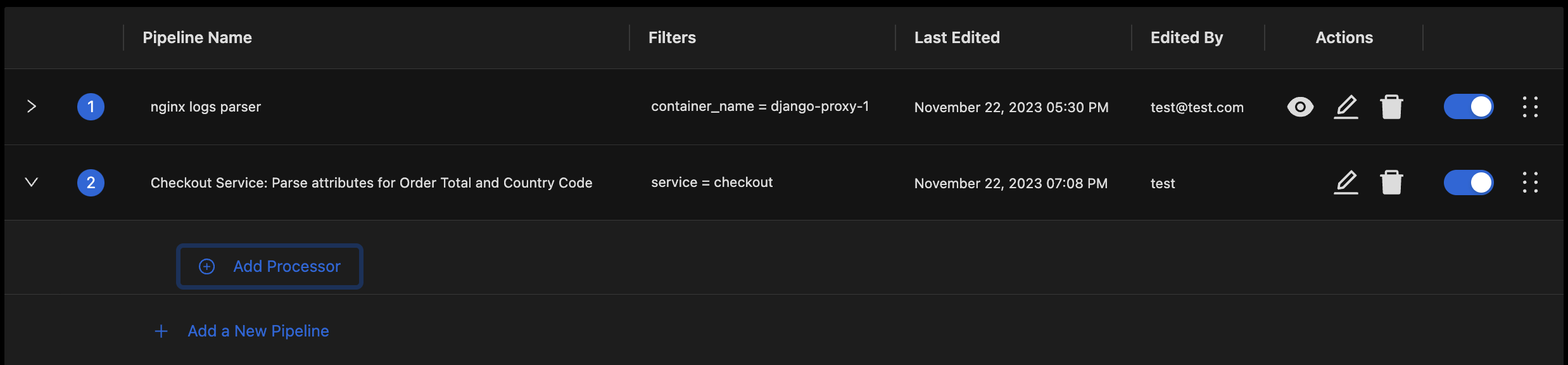

New Pipeline button

If you already have some pipelines, press the "Enter Edit Mode" button and then click the "Add a New Pipeline" button at the bottom of the list of pipelines.

Enter Edit Mode button

Add a New Pipeline button

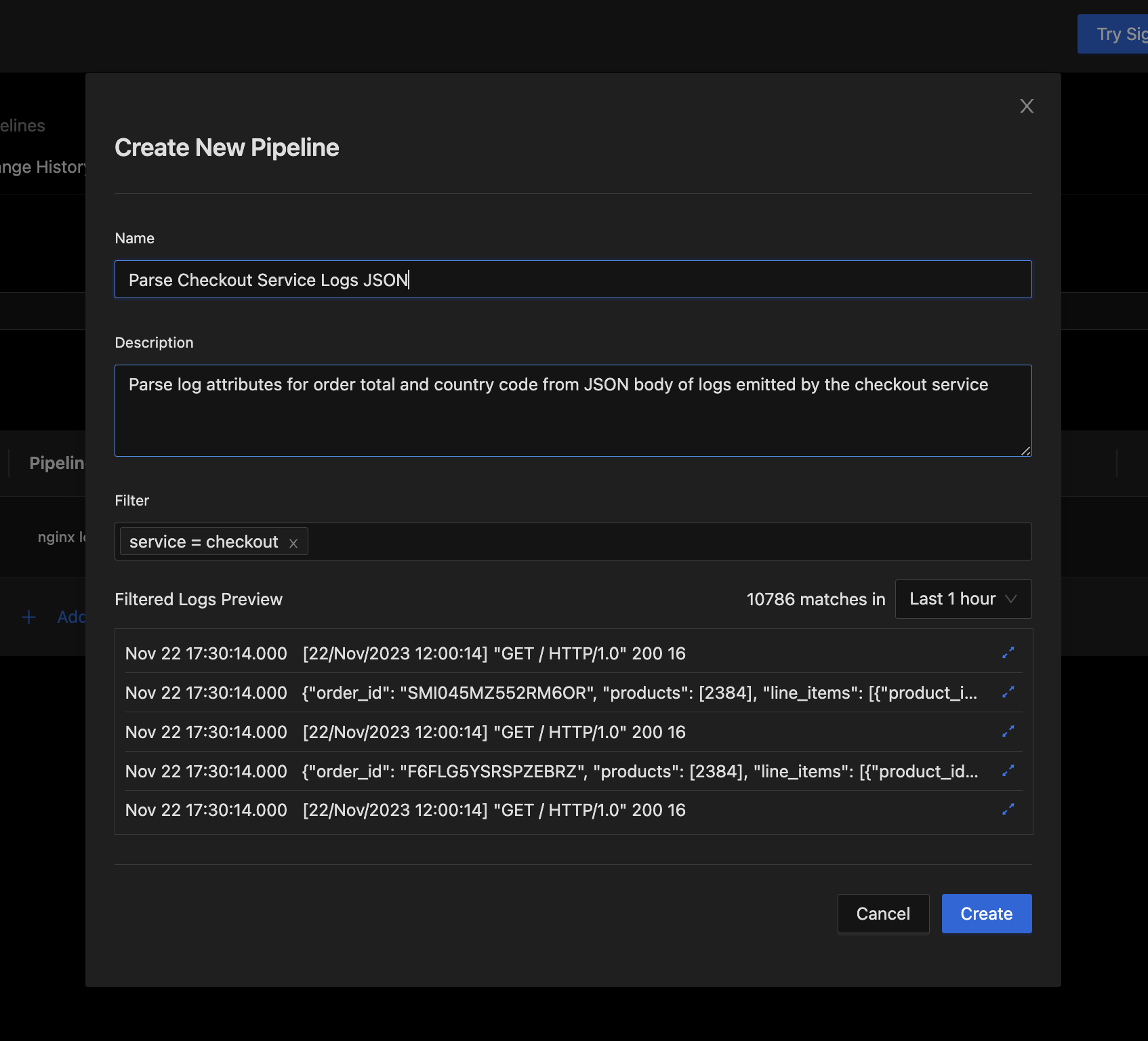

Provide details about the pipeline in the Create New Pipeline dialog.

- Use the Name field to give your pipeline a descriptive short name.

- Use the Description field to add a detailed description for your pipeline.

- Use the Filter field to select the logs you want to process with this pipeline.

Typically, these are filters identifying the source of the logs you want to process, for example `service = checkout`.

- Use the Filtered Logs Preview to verify that the logs you want to process will be selected by the pipeline.

Note that it is acceptable, though not ideal, if your filter also selects some non-JSON logs.
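To make the filter's role concrete, here is a minimal Python sketch that applies an equality filter like `service = checkout` to a few sample records. The record layout (a dict with `resource`, `attributes`, and `body` keys) and the helpers `matches_filter` and `is_json_body` are hypothetical stand-ins for illustration; SigNoz's filter field supports richer expressions than this.

```python
import json

# Hypothetical sample records; this field layout is an assumption for illustration.
SAMPLE_LOGS = [
    {"resource": {"service": "checkout"}, "attributes": {},
     "body": '{"country": "DE", "order_id": 42}'},
    {"resource": {"service": "checkout"}, "attributes": {},
     "body": "plain text startup message"},
    {"resource": {"service": "billing"}, "attributes": {},
     "body": '{"country": "US"}'},
]


def matches_filter(record: dict, key: str, value: str) -> bool:
    """Return True if the resource field `key` equals `value` (equality only)."""
    return record.get("resource", {}).get(key) == value


def is_json_body(record: dict) -> bool:
    """Return True if the body parses as a JSON object."""
    try:
        return isinstance(json.loads(record["body"]), dict)
    except (TypeError, ValueError):
        return False


# Select logs the way a `service = checkout` filter would, then count JSON bodies.
selected = [r for r in SAMPLE_LOGS if matches_filter(r, "service", "checkout")]
json_count = sum(is_json_body(r) for r in selected)
print(f"selected {len(selected)} logs, {json_count} with JSON bodies")
```

In this sketch the filter picks up the plain-text checkout log as well, which mirrors the situation described above: the selection is driven by the log's source, not by whether its body is JSON.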

Create New Pipeline dialog

Press the "Create" button if everything looks right.

Step 3: Add Processors for Parsing Desired Fields into Log Attributes

Each added attribute increases the size of your log records in the database, so it is often desirable to parse only a few fields of interest out of the JSON body into their own log attributes.

To achieve this, we will first use a JSON parsing processor to parse the log body into a temporary attribute, then we will move the desired fields from the temporary attribute into their own log attributes, and finally remove the temporary log attribute.
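The three-step strategy can be sketched in Python as plain dictionary operations on a single log record. This is a minimal model under stated assumptions, not how SigNoz executes processors: the record layout, the function names, and the `country`, `order_id`, and `debug` fields are hypothetical, while `temp_parsed_body` matches the example attribute name used in this guide.

```python
import json


def parse_json_body(record: dict, temp_key: str) -> None:
    """Step 1: parse the JSON body into a temporary attribute."""
    record["attributes"][temp_key] = json.loads(record["body"])


def move_field(record: dict, temp_key: str, field: str, target: str) -> None:
    """Step 2: move one field out of the temporary attribute into its own attribute."""
    record["attributes"][target] = record["attributes"][temp_key].pop(field)


def remove_attribute(record: dict, key: str) -> None:
    """Step 3: drop the temporary attribute."""
    del record["attributes"][key]


# Hypothetical log record with a serialized JSON body.
record = {
    "body": '{"country": "DE", "order_id": 42, "debug": {"retries": 3}}',
    "attributes": {},
}

parse_json_body(record, "temp_parsed_body")
for field in ("country", "order_id"):
    move_field(record, "temp_parsed_body", field, field)
remove_attribute(record, "temp_parsed_body")

print(record["attributes"])
```

The body itself is left as-is; only the chosen fields become attributes, and the unwanted `debug` object is discarded together with the temporary attribute.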

Expand the new Pipeline to add processors to it.

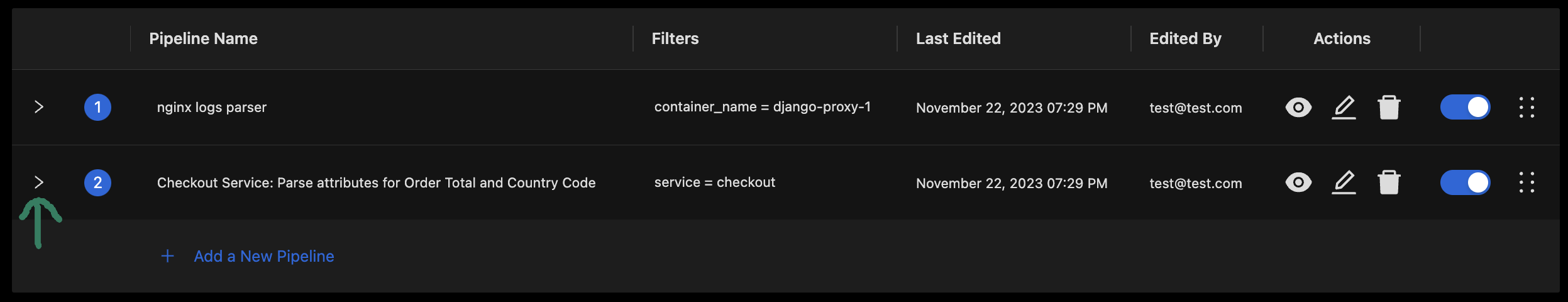

Creating a Pipeline adds it to the end of the Pipelines List. It can be expanded by clicking the highlighted icon.

Expanding a pipeline shows the Add Processor button Add a processor to parse the JSON log body into a temporary attribute.

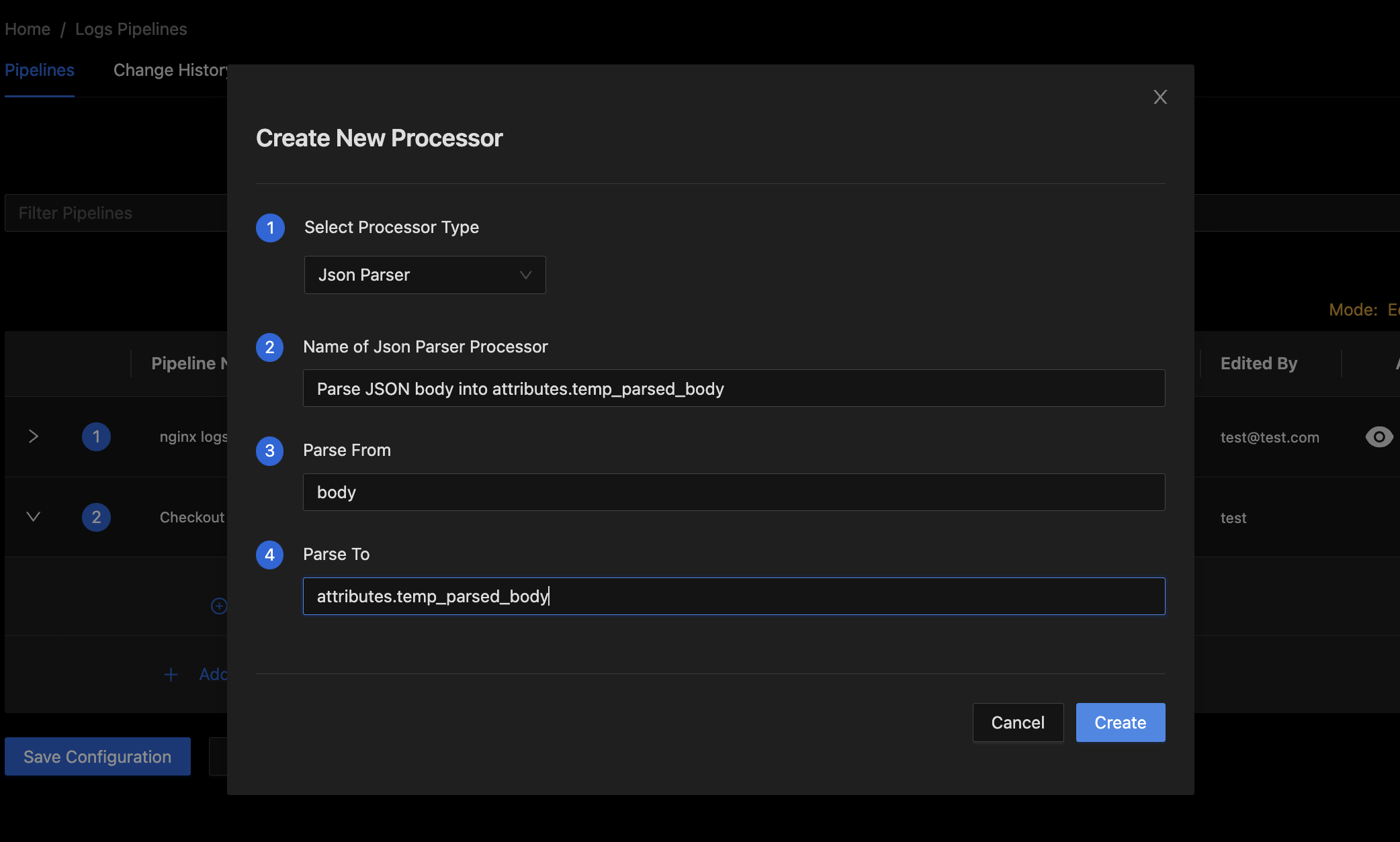

- Click the Add Processor Button to bring up the Dialog for adding a new processor.

- Select

Json Parserin the Select Processor Type field. - Use the Name of Json Parser Processor field to set a short descriptive name for the processor.

- Set the Parse From field to

body - Use Parse To field to define the attribute where the parsed JSON body should be stored temporarily. For example

attributes.temp_parsed_body.

Add New Processor Dialog - Press the Create button to finish adding the processor.
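Because the pipeline filter may also select non-JSON logs, it helps to think about what the JSON parsing step does with them. The Python sketch below assumes that a body which does not parse as a JSON object is passed through unchanged, with no temporary attribute created. That behavior, and the `json_parse_step` helper, are assumptions for illustration only, so confirm the actual behavior with the preview in Step 4.

```python
import json


def json_parse_step(record: dict, parse_to: str = "temp_parsed_body") -> dict:
    """Parse record["body"] into attributes[parse_to] if it is a JSON object.

    Assumption: bodies that are not JSON objects are passed through untouched.
    """
    try:
        parsed = json.loads(record["body"])
    except (TypeError, ValueError):
        return record  # not JSON: leave the record as-is
    if isinstance(parsed, dict):
        record["attributes"][parse_to] = parsed
    return record


json_log = {"body": '{"country": "DE", "user": {"id": 7}}', "attributes": {}}
text_log = {"body": "GET /health 200", "attributes": {}}

json_parse_step(json_log)
json_parse_step(text_log)
print(json_log["attributes"])
print(text_log["attributes"])
```

Nested objects such as `user` stay nested under the temporary attribute, so later Move processors can reach them by path.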

Add Move processors to get desired fields out of the temporary attribute containing parsed JSON into their own log attributes.

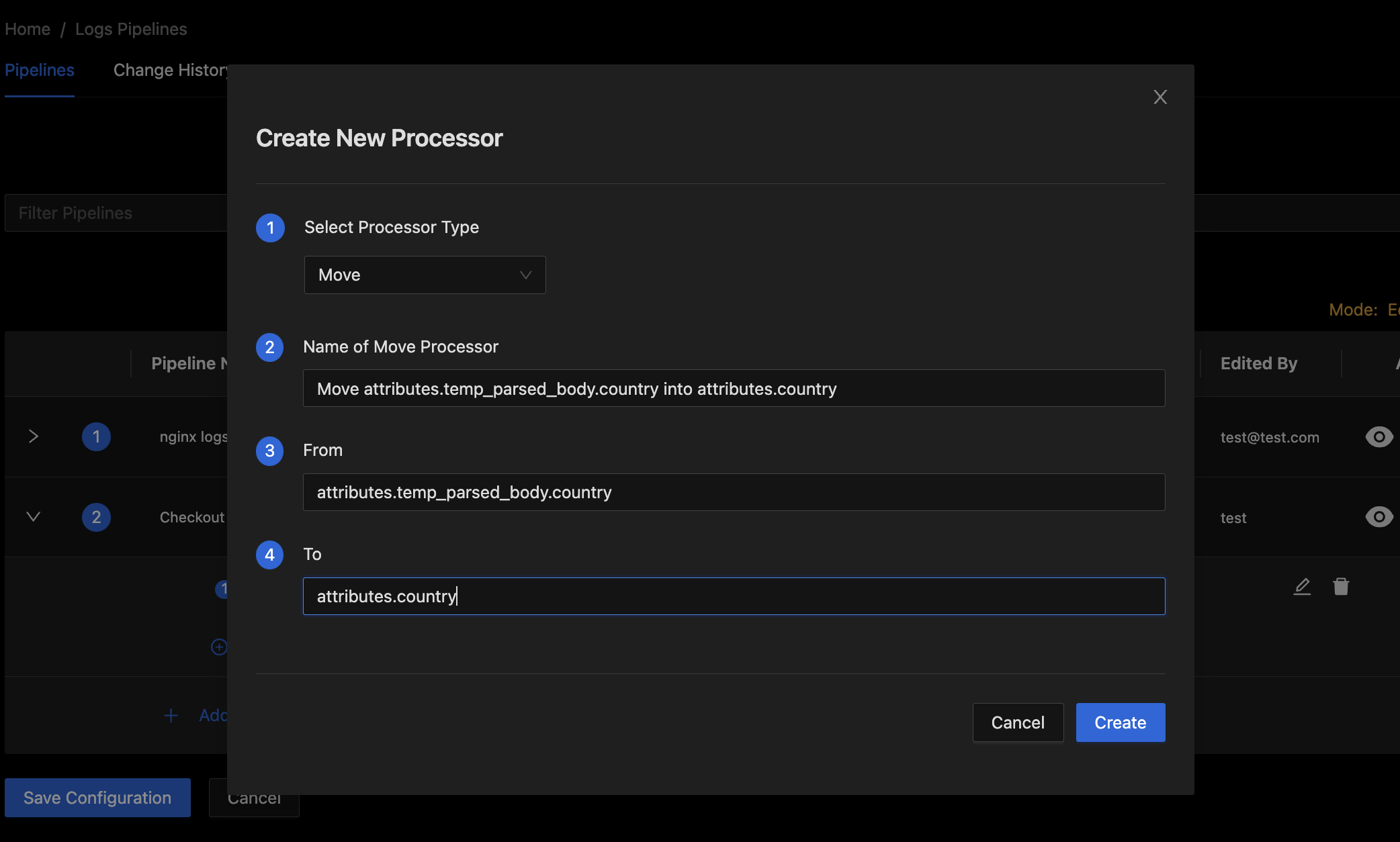

- Click the Add Processor Button to bring up the Dialog for adding a new processor.

- Select

Movein the Select Processor Type field. - Use the Name of Move Processor field to set a short descriptive name for the processor.

- Set the From field to the path of the parsed JSON field to be extracted. For example

attributes.temp_parsed_body.country - Use To field to define the attribute where the JSON field should be stored.

Add Move Processor Dialog - Press the Create button to finish adding the processor.

- Repeat these steps to create a Move processor for moving each desired JSON field into its own log attribute.
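A Move processor's From and To fields are dotted paths such as `attributes.temp_parsed_body.country`. The Python sketch below resolves such paths against a nested dictionary to show what a move does, including for a nested field. The `move` and `_walk` helpers, the example target names `attributes.country` and `attributes.user_id`, and the skip-if-missing behavior are assumptions of this sketch, not documented SigNoz semantics.

```python
def _walk(root: dict, parts: list[str]) -> dict:
    """Return the dict that contains the last path segment."""
    node = root
    for part in parts[:-1]:
        node = node[part]
    return node


def move(record: dict, from_path: str, to_path: str) -> bool:
    """Move the value at from_path to to_path; return False if from_path is absent."""
    src_parts = from_path.split(".")
    dst_parts = to_path.split(".")
    try:
        parent = _walk(record, src_parts)
        value = parent.pop(src_parts[-1])
    except (KeyError, TypeError, AttributeError):
        return False  # assumption: a missing source field is skipped
    dest = record
    for part in dst_parts[:-1]:
        dest = dest.setdefault(part, {})  # create intermediate dicts if needed
    dest[dst_parts[-1]] = value
    return True


# Hypothetical record after the JSON parsing step.
record = {
    "attributes": {
        "temp_parsed_body": {"country": "DE", "user": {"id": 7}},
    },
}
move(record, "attributes.temp_parsed_body.country", "attributes.country")
move(record, "attributes.temp_parsed_body.user.id", "attributes.user_id")
missing = move(record, "attributes.temp_parsed_body.region", "attributes.region")
print(record["attributes"], missing)
```

Each Move processor handles exactly one field, which is why the step above is repeated once per field you want to extract.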

Add processor for removing attribute used for temporarily storing the parsed JSON log body.

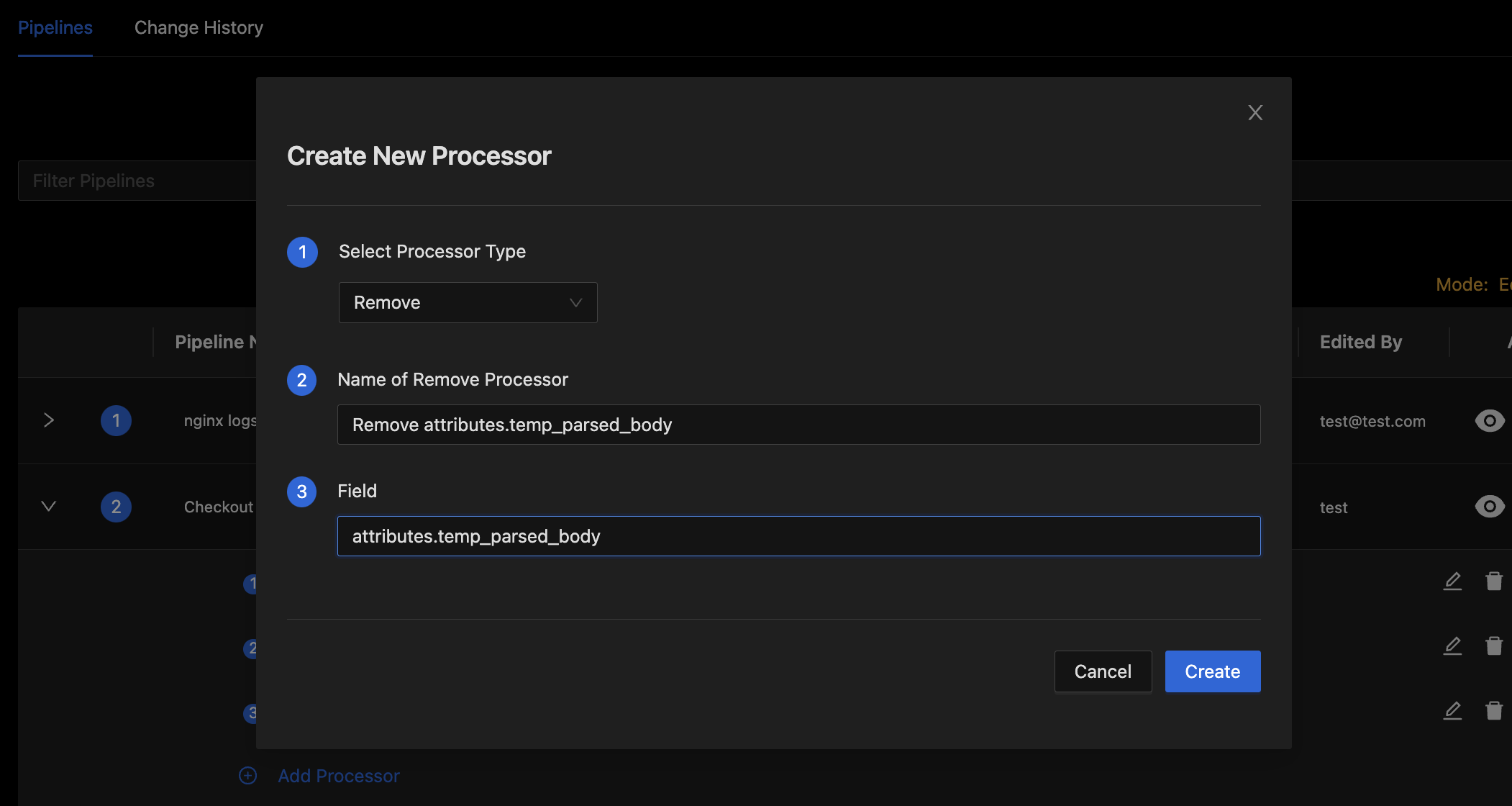

- Click the Add Processor Button to bring up the Dialog for adding a new processor.

- Select

Removein the Select Processor Type field. - Use the Name of Remove Processor field to set a short descriptive name for the processor.

- Set Field input to path of the attribute we used for storing parsed JSON body temporarily. For example

attributes.temp_parsed_body

Remove Processor Dialog - Press the Create button to finish adding the processor.
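The Remove processor is added last for a reason. The Python sketch below assumes that processors run in the order they are listed, models a pipeline as an ordered list of steps, and runs it twice: once with the Remove step at the end and once with it before the Move step. Removing the temporary attribute too early leaves nothing to move. The step functions and the `country` field are hypothetical simplifications.

```python
import json
from typing import Callable

Record = dict
Step = Callable[[Record], None]

TEMP = "temp_parsed_body"


def parse(record: Record) -> None:
    """Parse the JSON body into the temporary attribute."""
    record["attributes"][TEMP] = json.loads(record["body"])


def move_country(record: Record) -> None:
    """Move `country` out of the temporary attribute; skip if absent (assumption)."""
    temp = record["attributes"].get(TEMP, {})
    if "country" in temp:
        record["attributes"]["country"] = temp.pop("country")


def remove_temp(record: Record) -> None:
    """Drop the temporary attribute if present."""
    record["attributes"].pop(TEMP, None)


def run(steps: list[Step], body: str) -> dict:
    """Apply the steps in order to a fresh record and return its attributes."""
    record = {"body": body, "attributes": {}}
    for step in steps:
        step(record)
    return record["attributes"]


BODY = '{"country": "DE", "order_id": 42}'
correct_order = run([parse, move_country, remove_temp], BODY)
wrong_order = run([parse, remove_temp, move_country], BODY)
print("parse, move, remove ->", correct_order)
print("parse, remove, move ->", wrong_order)
```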

Step 4: Preview and Validate Pipeline Processing

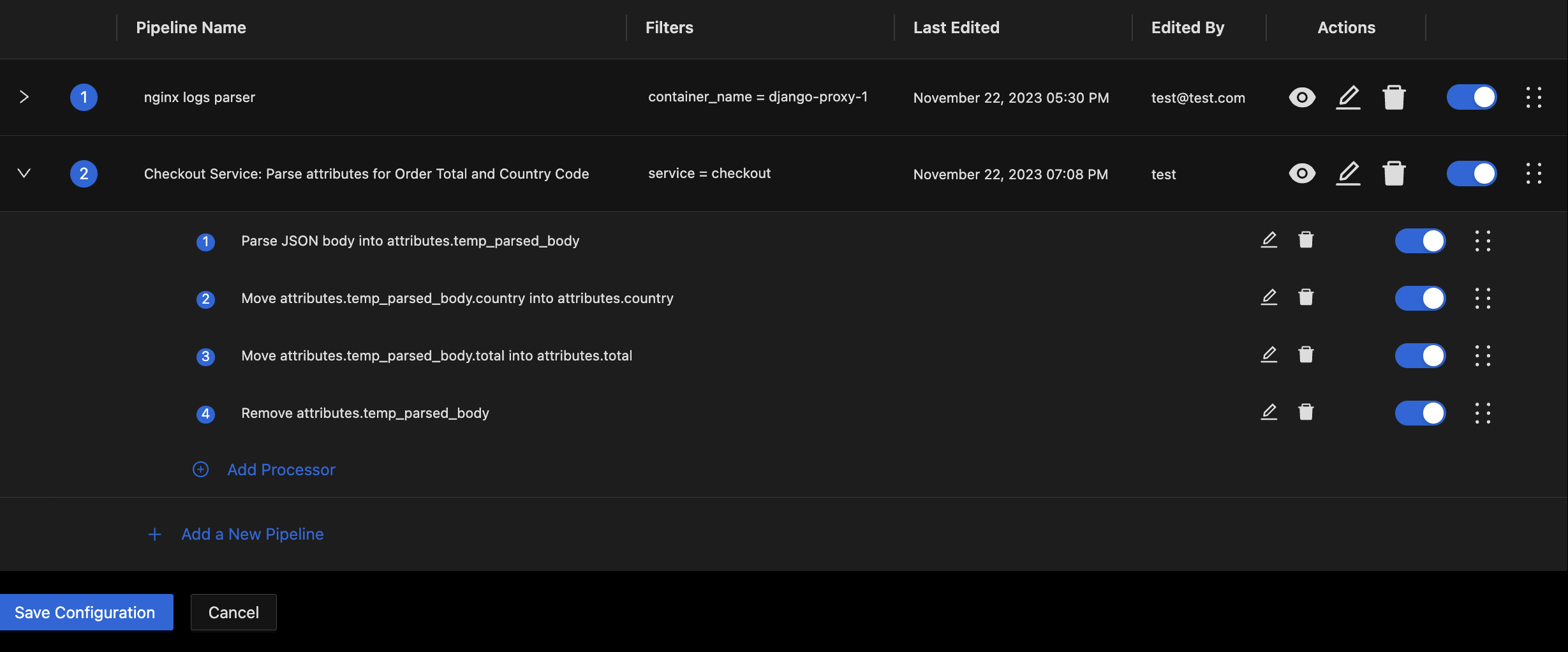

At this point you should have the pipeline ready with all necessary processors.

Before we save and deploy the pipeline, it is best to simulate processing on some sample logs to validate that the pipeline will work as expected.

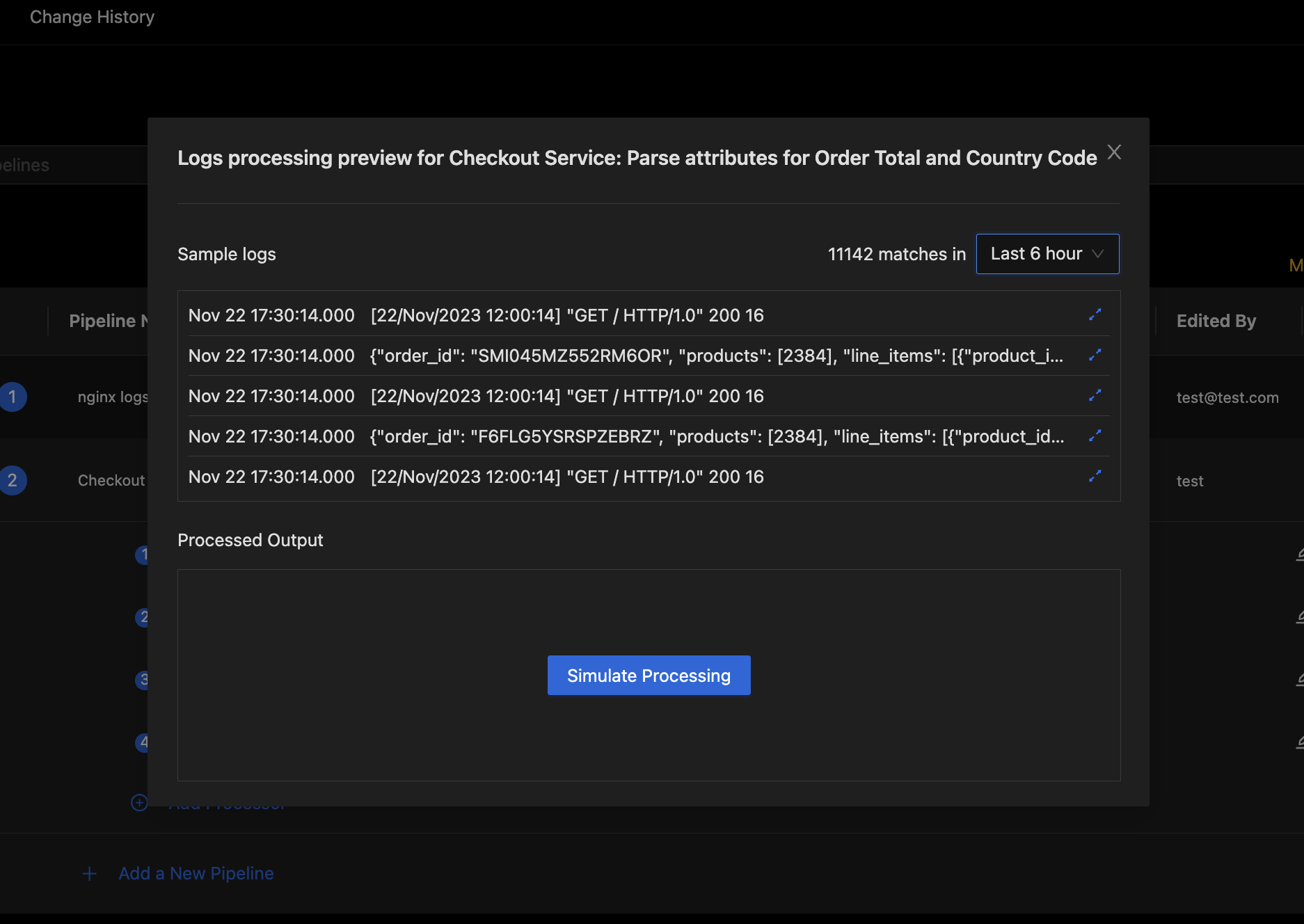

Click the "eye" icon in the actions column for the pipeline to bring up the Pipeline Preview Dialog.

The preview Dialog will start out with sample logs queried from the database. You can adjust the sample logs search duration if there are no recent samples available.

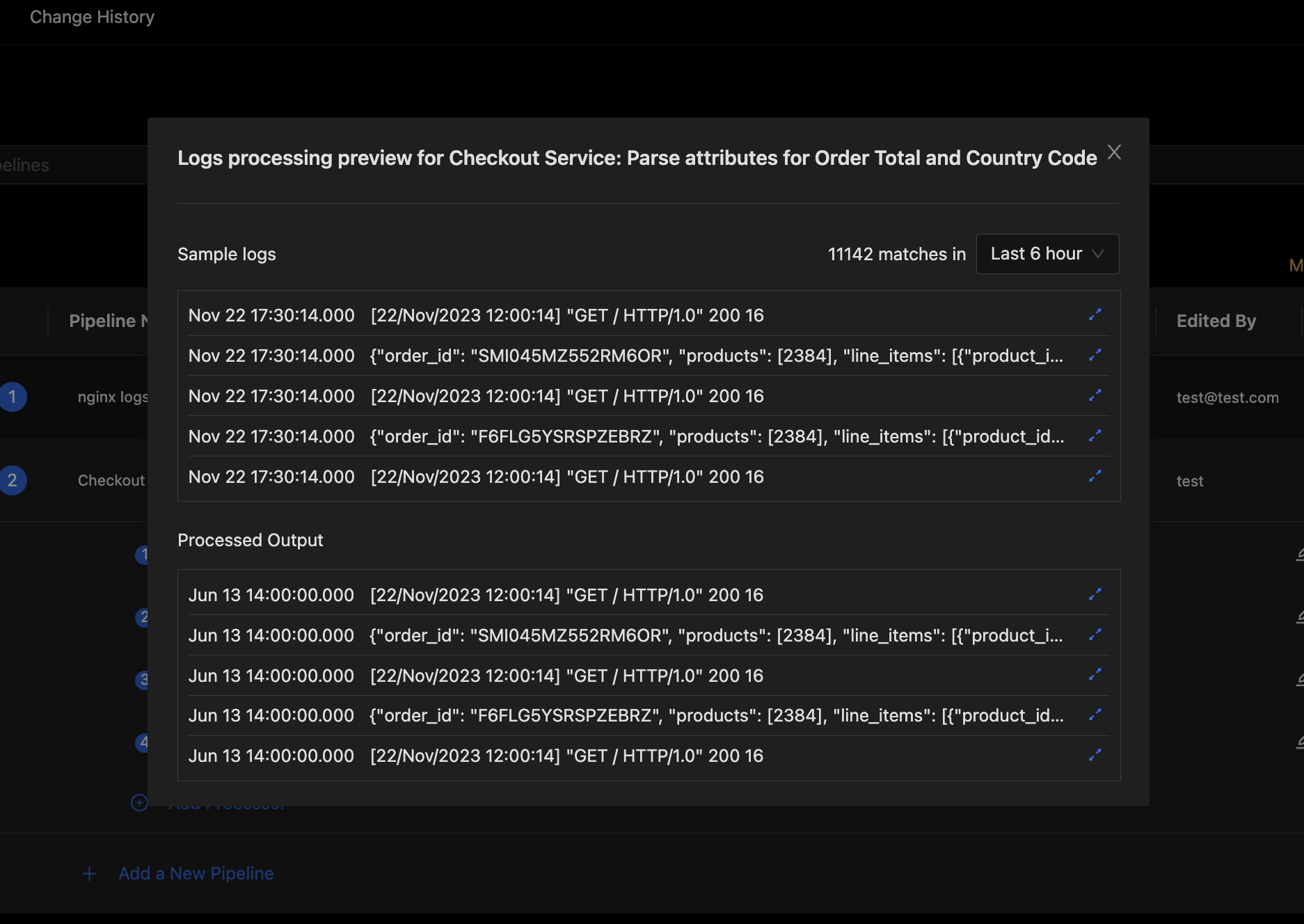

To simulate pipeline processing, press the Simulate Processing button in the bottom section of the Pipeline Preview Dialog.

This will simulate pipeline processing on the sample logs and show the output.

You can click on the expand icon on the right end of each processed log to open the detailed view for that log. Expand some of the processed logs to verify that your desired log attributes were extracted as expected.

If you see any issues, you can close the preview, edit your processors as needed and preview again to verify. Iterate on your pipeline and processor config until it all works just the way you want it.
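When iterating, it can help to state explicitly what "works as expected" means for each processed sample. The Python sketch below checks a list of processed log records for the expected attributes and for a leftover temporary attribute. The record shape, the `check_processed` helper, and the attribute names are hypothetical; the preview itself is a UI feature, and this sketch does not call any SigNoz API.

```python
def check_processed(records: list[dict], expected: set[str], temp_key: str) -> list[str]:
    """Return human-readable problems found in processed log records."""
    problems = []
    for i, record in enumerate(records):
        attrs = record.get("attributes", {})
        missing = expected - set(attrs)
        if missing:
            problems.append(f"log {i}: missing {sorted(missing)}")
        if temp_key in attrs:
            problems.append(f"log {i}: temporary attribute {temp_key!r} still present")
    return problems


# Hypothetical processed samples: the second one shows a misconfigured pipeline.
processed = [
    {"attributes": {"country": "DE", "order_id": 42}},
    {"attributes": {"country": "US", "temp_parsed_body": {"order_id": 7}}},
]

problems = check_processed(processed, {"country", "order_id"}, "temp_parsed_body")
for problem in problems:
    print(problem)
```

A missing field together with a leftover temporary attribute, as in the second sample, usually points to a missing Move processor or a Remove processor that is not configured as expected.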

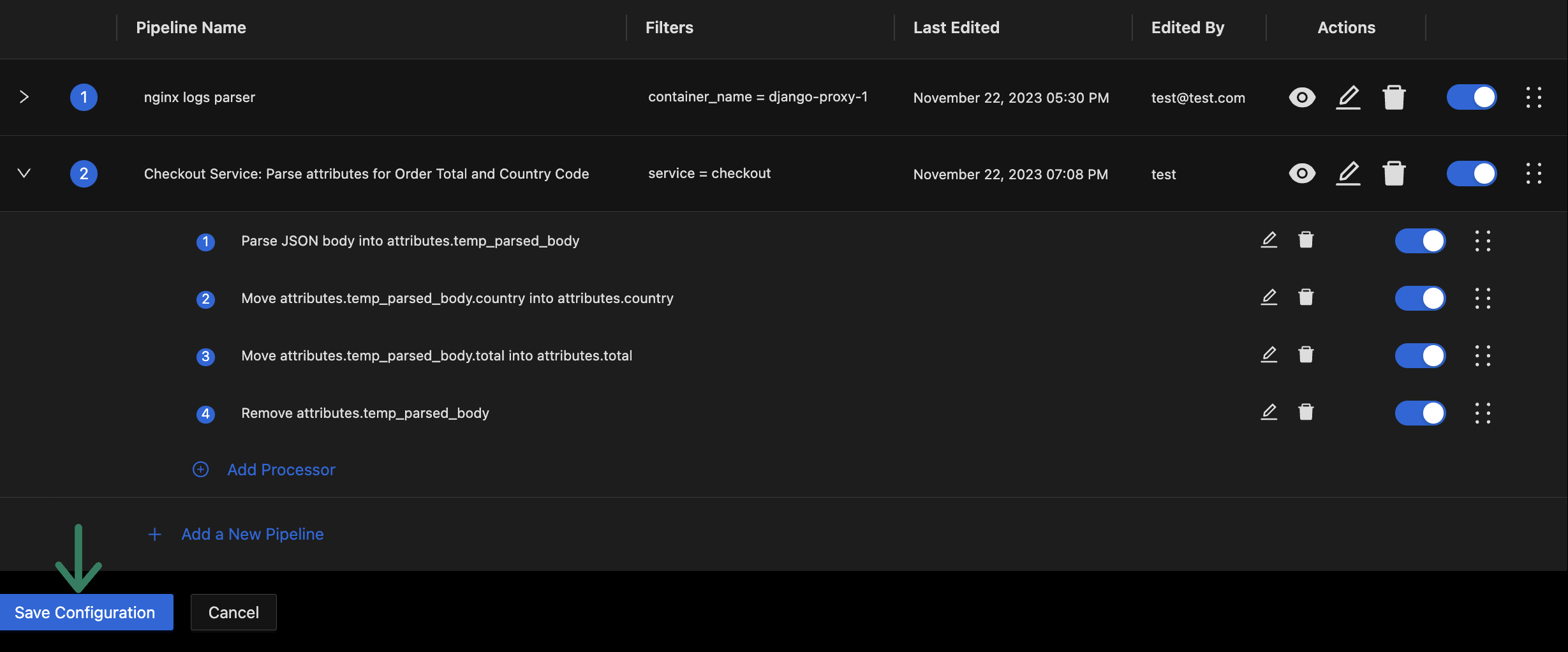

Step 5: Save Pipelines and Verify

Once you have previewed your pipeline and verified that it will work as expected, press the Save Configuration button at the bottom of the pipelines list to save pipelines. This will store the latest state of your pipelines and will deploy them for pre-processing.

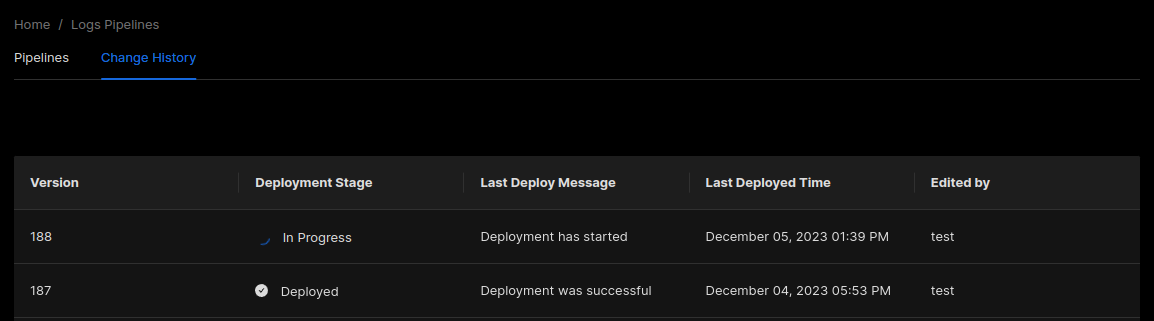

You can track the deployment status of your pipelines using the Change History tab at the top of the pipelines page.

Wait for a few minutes to let the pipelines deploy and for the latest batches of logs to get pre-processed and stored in the database. Then you can head over to the logs explorer to verify that log attributes are getting parsed out of serialized JSON in log bodies as expected.

You can now start using the new log attributes you have extracted for more efficient filtering and aggregations.